Adding native JSON storage support in Drupal 7 or how to mix RDBM with NoSQL

Adding native JSON storage support in Drupal 7 or how to mix RDBM with NoSQL

I rencently read this very interesting article of an all time .Net developer comparing the MEAN stack (Mongo-Express-Angluar-Node) with traditional .Net application design.

Among other things he compared the tedious process of adding a new field in the RDBM model (modifying the database, then the data layer, then views and controllers) whereas in the MEAN stack it was as simple as adding two lines of code to the UI.

In this article we will see how to get native JSON document support in your Drupal entities (mixing traditional RDBM storage with NoSQL in the same database) and explore the benefits of having such a combination. Combining RDBM and NoSQL withthin the same storage engine can have it's benefits.

RDBM vs NoSQL

Everyone is talking now about NoSQL, Big Data, NewSQL, etc... and it can be sometimes difficult to see what is the best IT decision to make. Let's see how to determine if NoSQL is the choice to make.

| RDBM | NoSQL | |

| Nature of data | Simple structure that can be easily represented by a relational model. | Complex nested structures such as those ones used in geo-spatial, engineering parts or molecular modeling that fit with difficulty in a relational model. |

| Volatility of data model | Requires a get it first approach due to rigidity, and to know all the facts about the data model at design time. | Allows on-the-fly change of data structures, allowing greater flexibility when it comes to application development and evolution. |

| Application development | Database administrators and developers are clearly separated roles. | The distinction between DBA and developers is blurred and the software developer becomes the most important user. Developer requires high coding velocity and great agility in the application building process. |

| Performance and scaling | Difficult horizontal scaling and complex high availability (redundancy). | Designed to scale-out, at the expense of consistency. In a NoSQL database like MongoDB, for example, a document can be the equivalent of rows joined across multiple tables, and consistency is guaranteed within that object. |

| Analytics | Complex query and analysis. | Fast realtime data that comes from many upstream systems. |

The rage with NoSQL in web development now is probably caused because a big portion of internet projects are simple to very simple web applications (and many times with extremely constrained budgets) that will most benefit of the flexibility and agility provided by NoSQL storage. It has also serious applications where data integrity and complexity does not require ACID engines (usually NoSQL implementations are indeed ACID but at the document level) and scalability/performance is way more important. Remember that RDBM's were conceived in the early years of computing when they were targeting mission critical applications such as banking.

For serious projects proper data storage choice and design is crucial factor and should be taken very seriously if you do not want to put at stake future scaling and development of your application.

It can easily be seen that the usage of one model or another depends a lot in your priorities and use cases, but nothing keeps you from using both in the same application and within the same backend database engine. So you can get Best Of Both Worlds.

Native JSON support in your current database engine

There's a chance your traditional database engine is already offering NoSQL/Document Storage like capabilities.

In MS SQL server we have had the XML field type for a long time now. This field type allows you to store XML documents inside a MS SQL Server field and query it's contents using XPATH. And it's been around since the year 2005.

This datatype was a great candidate to store data for our experiment, until I found out that PHP has crap XML support. I could not find a properly working (in a reasonable amount of time such as 5 minutes or less) serialization/deserialization method to convert PHP objects to XML and the other way round.

So what about JSON native support? PostgreSQL already offers that. MS SQL Server users have been demanding support for JSON since 2011, but still MS has not made any progress in this. I guess they are too much focused on making SQL Server work properly (and scale) to support Azure, rather than improving or adding new features.

Thanks God MS SQL Server is extremely well designed and pluggable, and someone came out with a CLR port of the PostgreSQL Json data type at http://www.json4sql.com/

In the same way MS has never supported GROUP_CONCAT and we had to use more CLR to get it working.

What do you get with this native type?

- A binary storage format for faster querying without reparsing

- A fluent API for object creation and manipulation

- A rich API for querying JSON objects using XPath like query expressions

- A fast parser and deserializer for conversion to and from JSON and binary

- Aggregate functions for easy conversion of SQL result sets to JSON objects and arrays

- A rich set of table value functions for converting JSON objects and arrays to results sets

These functions open the door to complex and powerful native JSON manipulation and querying all handled by the database engine as if it were structured RDBM data. The developers of JSON4SQL claim that it is 20% faster and consumes 20% less storage when compared to the native XML type. That's good news too.

Adding JSON support to Drupal's database abstraction layer

Now that we have the capability of storing and querying JSON documents in our RDBM database, let's see how can we support this from Drupal 7 in the least disruptive way possible.

We would have loved to be able to use our JSON property just as 'serialized' field specification, but to do so we would have to modify the serialization/deserialization scattered all over Drupal (and contrib) and add another setting to tell Drupal to use json_decode/json_encode instead of serialize/unserialize.

No problem, we will tell Drupal that our JSON based property is a text field and do the encoding/decoding ourselves when we need it.

The database engine is internally storing JSON fields as binary data.

For INSERTS and UPDATES there is no issue as you can pass the string based document in your statement and the database engine will convert this to the binary internal representation. But when you try to retrieve a JSON field without modifying the the database abstraction layer you won't get the JSON document as expected but the binary data, so we will make a simple fix to the SelectQuery->__toString method code to retrieve this fields as JSON strings:

foreach ($this->fields as $alias => $field) {

$table_name = isset($this->tables[$field['table']]['table']) ? $this->tables[$field['table']]['table'] : $field['table'];

$field_prefix = (isset($field['table']) ? $this->connection->escapeTable($field['table']) . '.' : '');

$field_suffix = '';

$field_name = $field['field'];

$field_alias = $field['alias'];

if (isset($field['table'])) {

$info = $this->connection->schema()->queryColumnInformation($table_name);

// If we are retrieving a JSON type column, make sure we bring back

// the string representation and not the binary data!

if(isset($info['columns'][$field_name]['type']) && $info['columns'][$field_name]['type'] == 'JSON') {

$field_suffix = '.ToString()';

}

}

$fields[] = $field_prefix . $this->connection->escapeField($field_name) . $field_suffix . ' AS ' . $this->connection->escapeField($field_alias);

}

The previous code will only work in the 7.x-2-x MS SQL Server version driver for Drupal. You can take a similar approach on Postgre and Oracle backends.

Using JSON based fields from Drupal

First of all we are going to add a storage property (field) to the node entity with the name extend. Our aim is going to be able to store new "fields" inside this JSON field without having to alter the database structure.

To do so, implement an update to alter the database structure (you can use hook_update for this) :

<?php

namespace Drupal\cerpie\plugins\updates;

use \Drupal\fdf\Update\UpdateGeneric;

use \Drupal\fdf\Update\IUpdate;

class update002 extends UpdateGeneric implements IUpdate {

/**

* {@inheritdoc}

*/

public static function Run(&$sandbox) {

if (!db_field_exists('node', 'extend')) {

db_add_field('node', 'extend', array(

'type' => 'text',

'length' => 255,

'not null' => FALSE,

// Tell the native type!

'sqlsrv_type' => 'JSON'

));

}

}

}

Then with a few hooks let's expose this new property:

/**

* Implements hook_schema_alter();

*/

function cerpie_schema_alter(&$schema) {

$schema['node']['fields']['extend'] = array(

'type' => 'text',

'length' => 255,

'not null' => FALSE,

);

}

/**

* Implements hook_entity_property_info_alter(&$info);

*/

function cerpie_entity_property_info_alter(&$info) {

$info['node']['properties']['extend'] = array(

'label' => 'JSON Extend',

'description' => 'Store aditional JSON based data',

'type' => 'text',

'sanitize' => 'check_plain'

);

}

That's basically everything you need to start storing and retrieving data inside a node in JSON format.

To store something inside the JSON document:

// Fields to store inside the JSON document.

$mydata = array('field1' => 'data1', 'field2' => 'data2');

$e = entity_create('node', array ('type' => 'curso'));

// Specify the author

$e->uid = $user->uid;

$e->extend = json_encode($mydata, JSON_UNESCAPED_UNICODE);

// Create a Entity Wrapper of that new Entity

$entity = entity_metadata_wrapper('node', $e);

$entity->save();

Imagine we now wanted to add some additional data to the node storage (field3 in the example), no need to alter the database schema:

$e = node_load($nid);

$mydata = json_decode($e->extend);

// Fields to store inside the JSON document.

$mydata['field3'] = 'data3';

$e->extend = json_encode($mydata, JSON_UNESCAPED_UNICODE);

// Create a Entity Wrapper of that new Entity

$entity = entity_metadata_wrapper('node', $e);

$entity->save();

Up to now there's nothing new we could not have done with a string based storage field. The power is unleashed when we are able to query/retrieve the JSON based data atomically.

What he have now is just something that looks like text based storage, but is natively backed by a JSON data type.

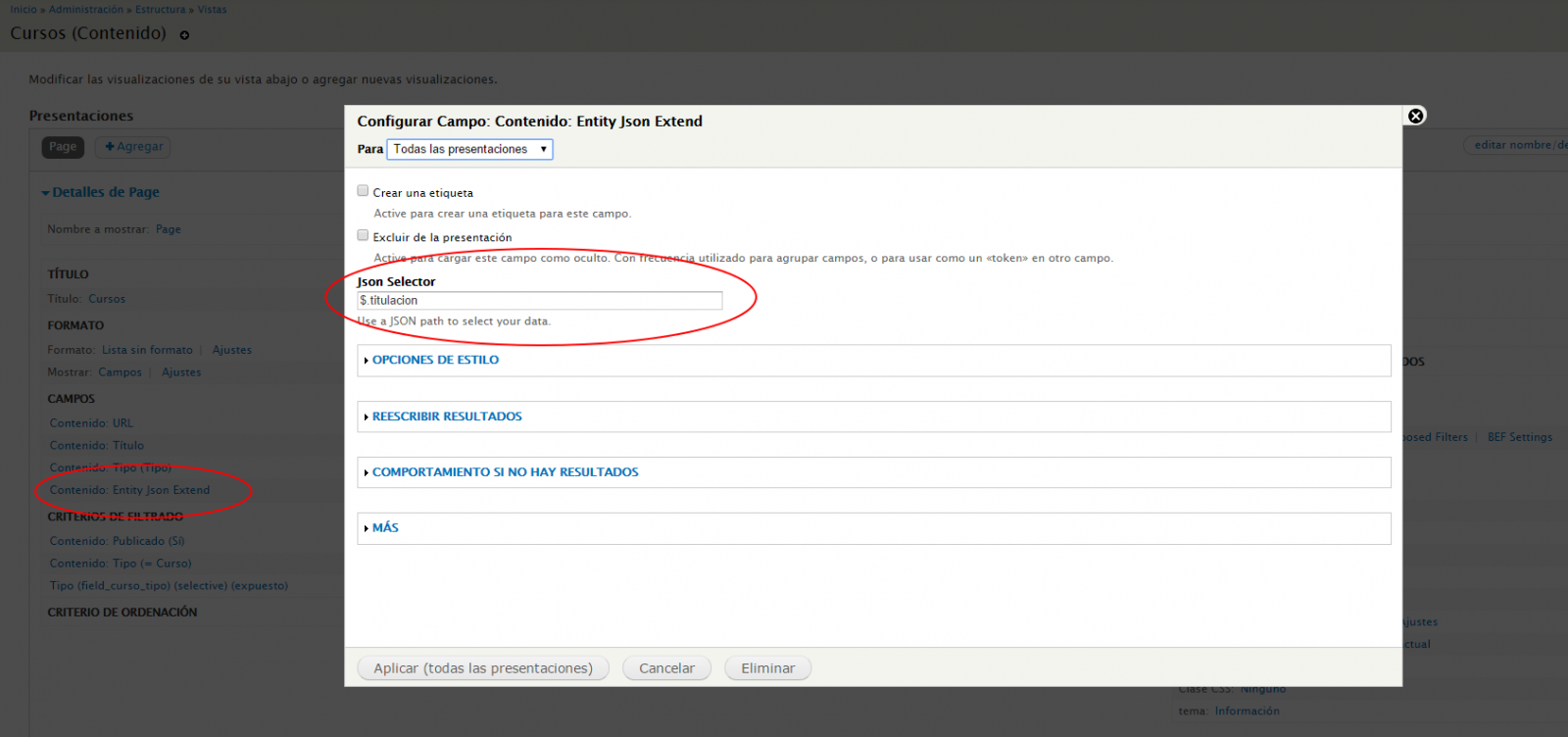

Exposing JSON based fields to Views

We are going to create a views field handler that will allow us to expose the json based fields as regular fields (sort of - remember this is a proof of concept).

Tell views that our module will be using it's API:

/**

* Implements hook_views_api().

*/

function cerpie_views_api() {

return array('api' => 3);

}

Create a *.views.inc file with that consumes the hook_views_data_alter:

function cerpie_views_data_alter(&$data) {

$data['node']['extend'] = array(

'title' => t('Entity Json Extend'),

'help' => t('Entity Json Stored Extended Properties'),

'field' => array(

'help' => t(''),

'handler' => '\\Drupal\\cerpie\\views\\JsonExtendDataFieldHandler',

)

);

}

Now implement our JsonExtendDataFieldHandler class:

<?php

namespace Drupal\cerpie\views;

class JsonExtendDataFieldHandler extends \views_handler_field {

/**

* Implements views_handler_field#query().

*/

function query() {

$this->field_alias = 'extend_json_' . $this->position;

$this->query->fields[$this->field_alias] = array(

// TODO: This is user input directly in the query!! find something better...

'field' => '[extend].Get(\'' . $this->options['selector'] . '\')',

'table' => NULL,

'alias' => $this->field_alias);

}

/**

* Implements views_handler_field#pre_render().

*/

function pre_render(&$values) {

}

/**

* Default options form.

*/

function option_definition() {

$options = parent::option_definition();

$options['selector'] = array('default' => '$');

return $options;

}

/**

* Creates the form item for the options added.

*/

function options_form(&$form, &$form_state) {

parent::options_form($form, $form_state);

$form['selector'] = array(

'#type' => 'textfield',

'#title' => t('Json Selector'),

'#default_value' => $this->options['selector'],

'#description' => t('Use a JSON path to select your data.'),

'#weight' => -10,

);

}

/**

* Implements views_handler_field#render().

*/

function render($values) {

return $values->{$this->field_alias};

}

}

We are done. Now you can easily retrieve any of the properties in the JSON document stored in the Extend field from the Views UI using a JsonPath selector.

A JsonPath selector is similar to XPATH and will allow you to retrieve any piece of data from within the JSON document.

About Performance

It is important to have a clear notion on what are the performance implications of such an approach (mixing RDBM with field based JSON document storage) before taking this any seriously. Of course, you should not expect this JSON based data to perform in the same way as it will do in a true NoSQL database such as MongoDB, and furthermore, this should underperform direct field based storage in a RDBM engine.

In this article they benchmarked the MS SQL Server XML data type (which should perform about 20% slower than the JSON storage we are using) against MongoDB document storage, with expected results that clearly indicate that you should not use this kind of storage if you plan to heavily rely on filtering/querying the data you store in these JSON documents. We don't know if the JSON data type supports indexing and that is a big concern.

Still, the development agility you get by having this additional storage type is worth the effort and we will be probably using this in some small upcoming projects. In the approach we used this storage is quite decoupled from Drupal's fields and that is indeed good because the data stored here won't bloat you Drupal installation, at the expense of not having (we could also work on that integration) out of the box "field" support for the data we store in this JSON documents. We've already got an extremely lightweight Views integration and support in databse abstraction layer.

Final Words

This was just a proof of concept but promissing experiment. With the given sample code you can easily implement a Views Filter or Sort Handler to the view based on any of the JSON stored fields. The database abstraction layer probably needs some more love to support querying JSON fields in a more user friendly way so that users do not need to learn the JsonPath notation. It is also not clear if the JSON document storage would be better placed in a field instead of being a property of the entity (and thus preventing it from being loaded during record manipulations).

You can of course directly use the JsonPath notation inside your queries to filter, sort or retrieve information from the database.

What are the benefits of storing data in this way?

Flexibility and speed when storing new fields (reduced time to market and application disruption). If a customer asks you to store some additional information in one of your entities, just drop it into the Json Document. If in the future you need to sort or filter using this field, no need to convert it to a real field or property because you can operate directly on the Json document. Depending on the use you give to the fields stored in the Json it can get to a point where performance will indicate to promote this to a real database field.

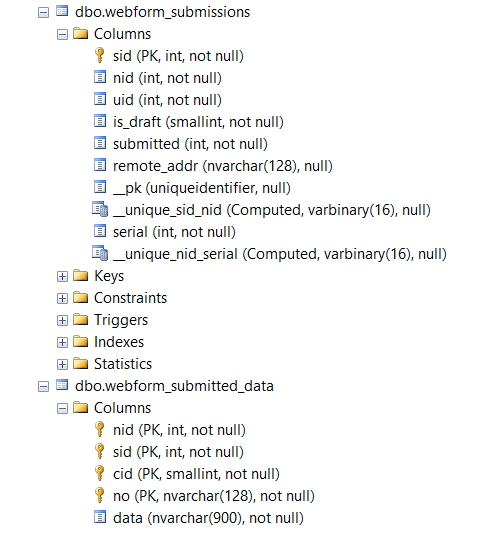

Improved support for flexible (unforecasted) data needs. Imagine how much the Webform module's database usage could be refactdored if they stored form submissions in JSON format instead of this "mess" (which is indeed a smart approach):

Going back to the original comparison of adding a new field to a MEAN stack application vs a traditiona RDBM based stack, you can use the here explained technique as a base to be able to easily support new data fields by simply touching the UI layer.

I think a very interesting contrib module could come out of this experiment (after solving some current design flaws and implemeting the missing functionality) that would allow users to attach a JSON document to any entity and have this information exposed to views. This potentially means no more hook_updates to alter the database schema for small/medium projects. And this module has the potential to be cross database portable (Postgre and MS SQL will work for sure, don't know about MySQL).

Knowing that donations simply don't work I wished there was a more robust commercial ecosystem for Drupal Contrib modules that would motivate to get this sort of ideas off the ground and into the contrib scene faster and with better support. This would also help Drupal have better site builder oriented quality modules like Wordpress has (fostering Drupal usage for quick site builds) and reduce the number of abandoned or half baked projects.

Add new comment